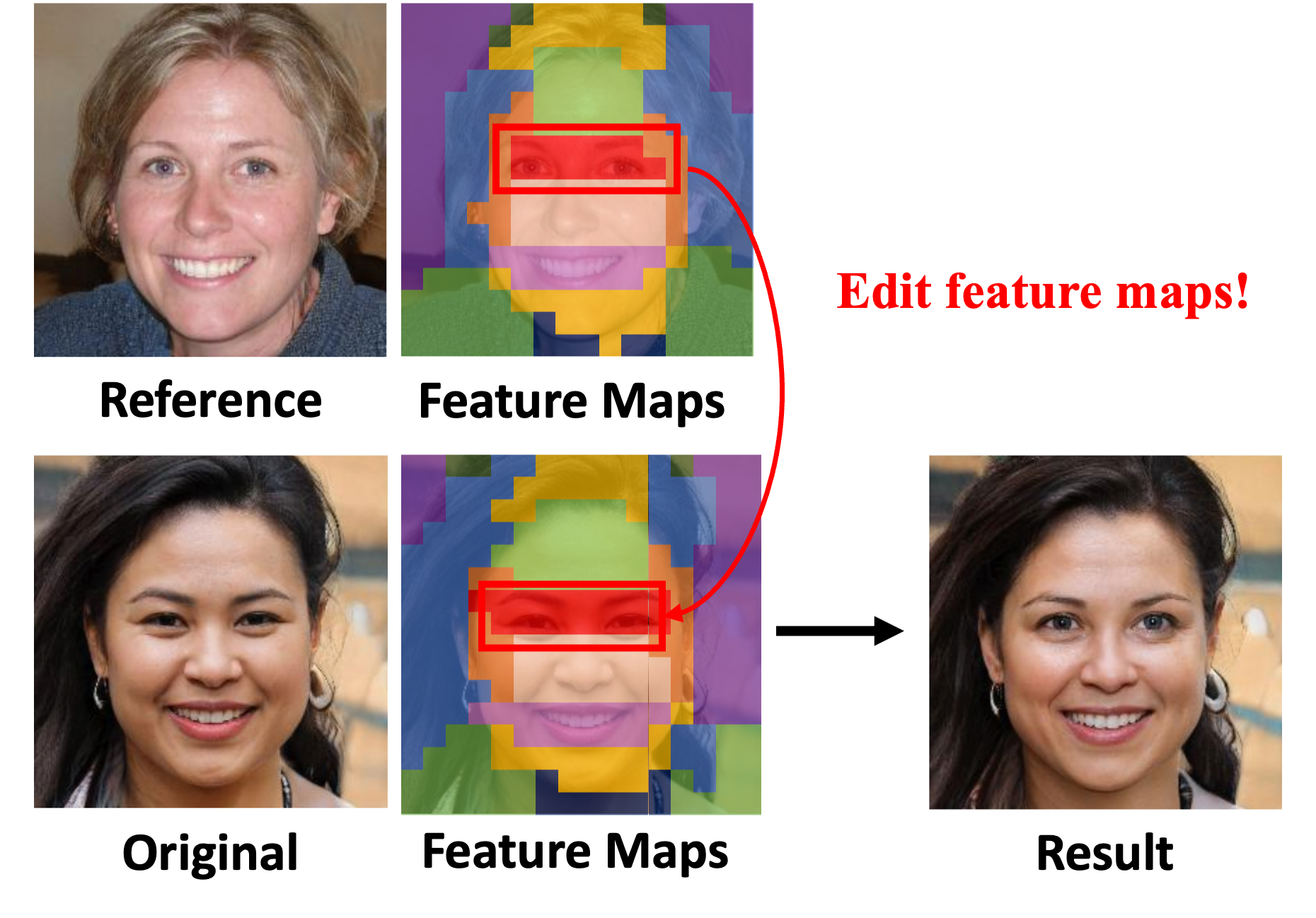

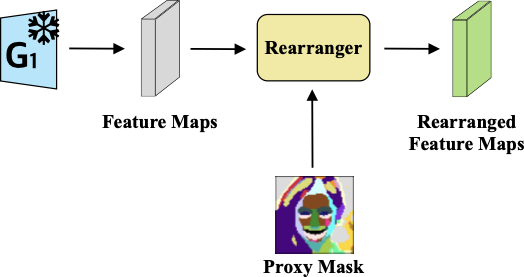

The intermediate feature maps of the generator contain the semantics of the resulting image. Therefore, editing these feature maps will result in a corresponding change to the resulting image.

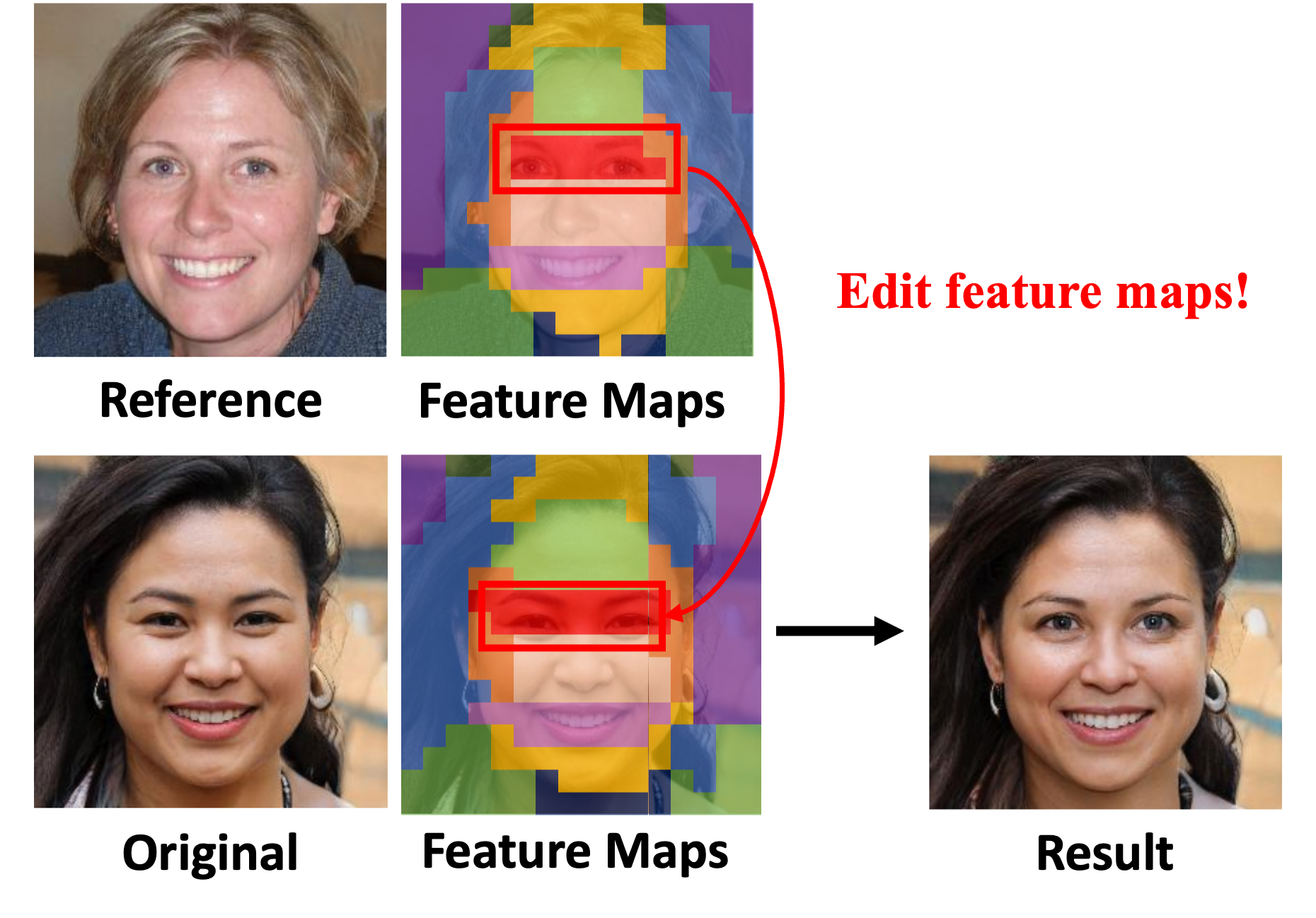

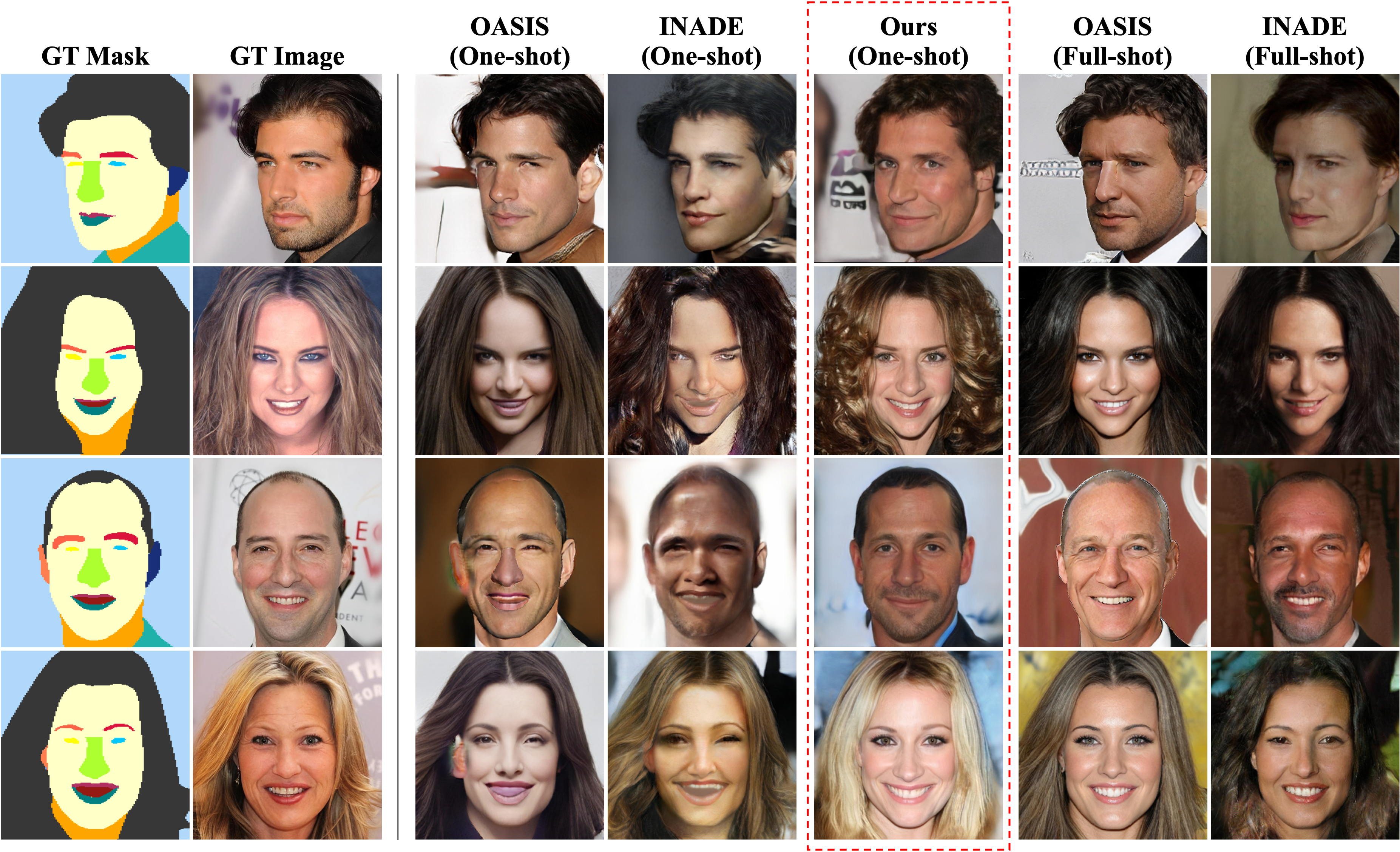

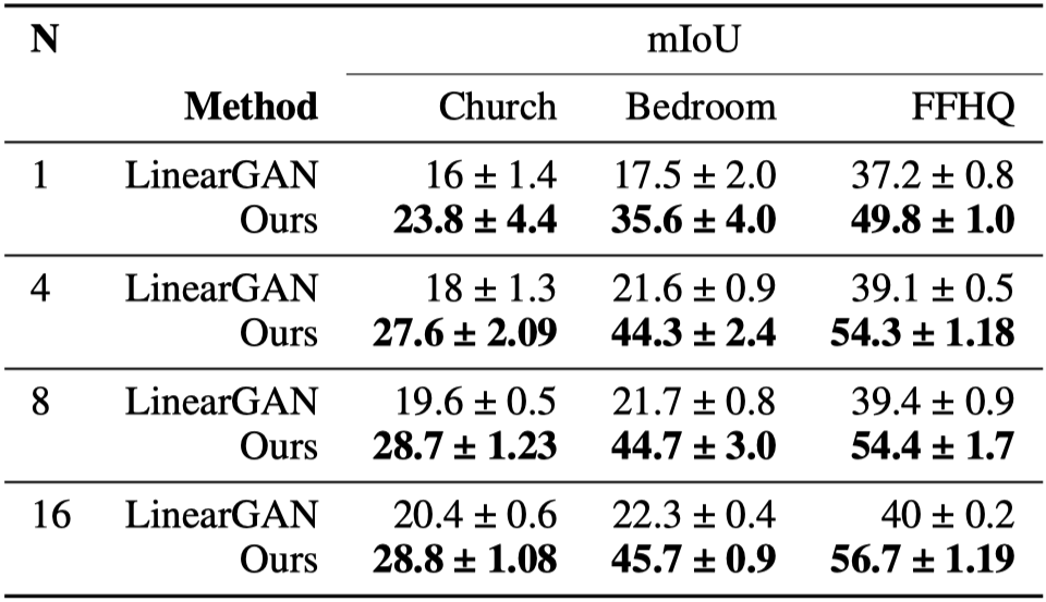

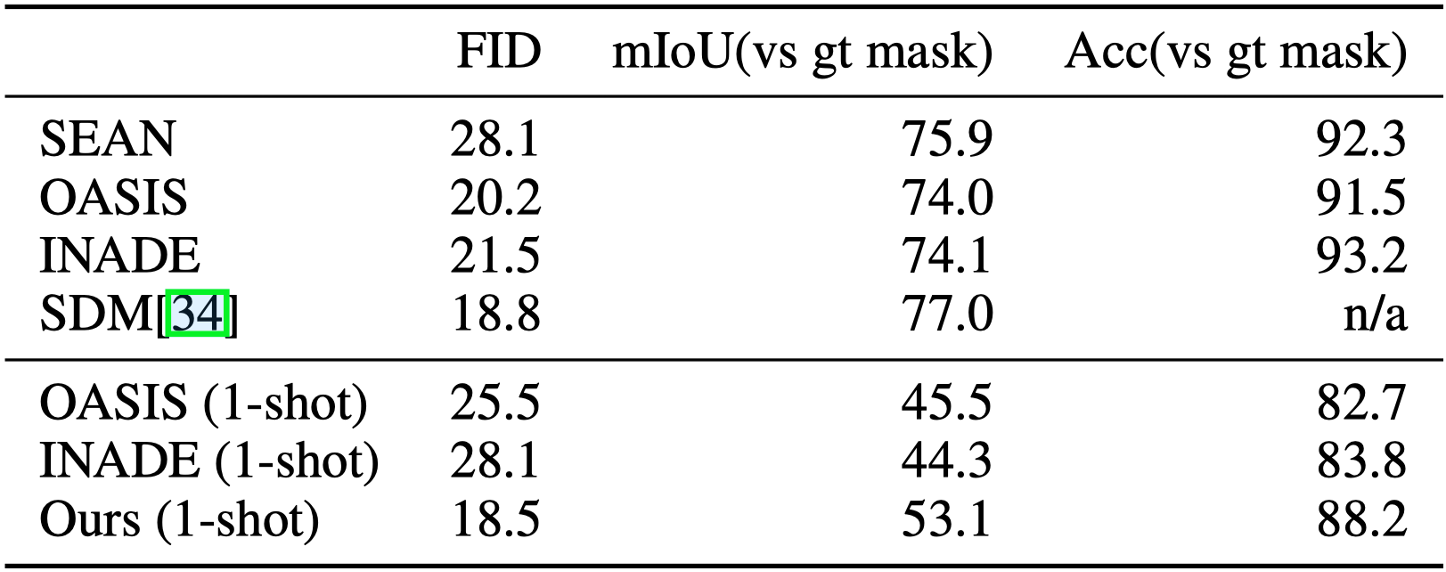

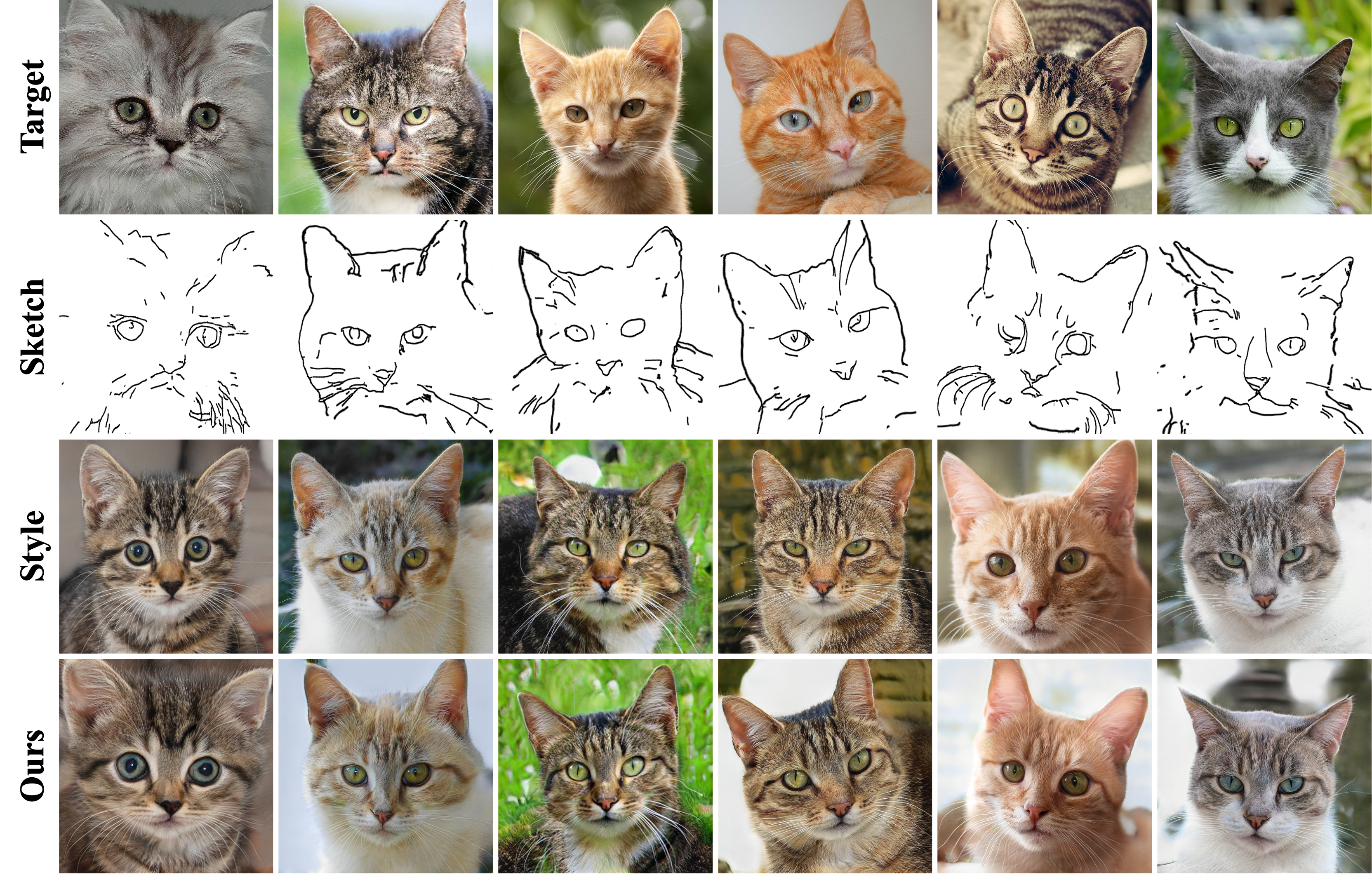

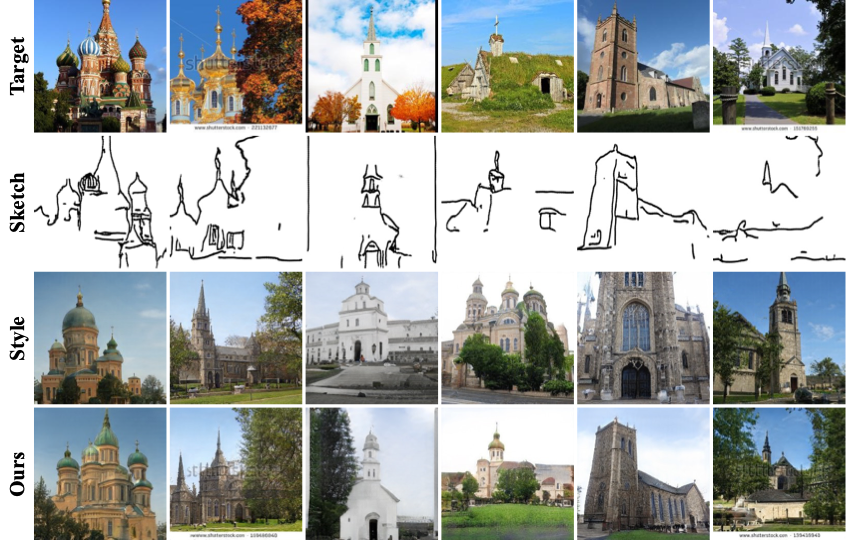

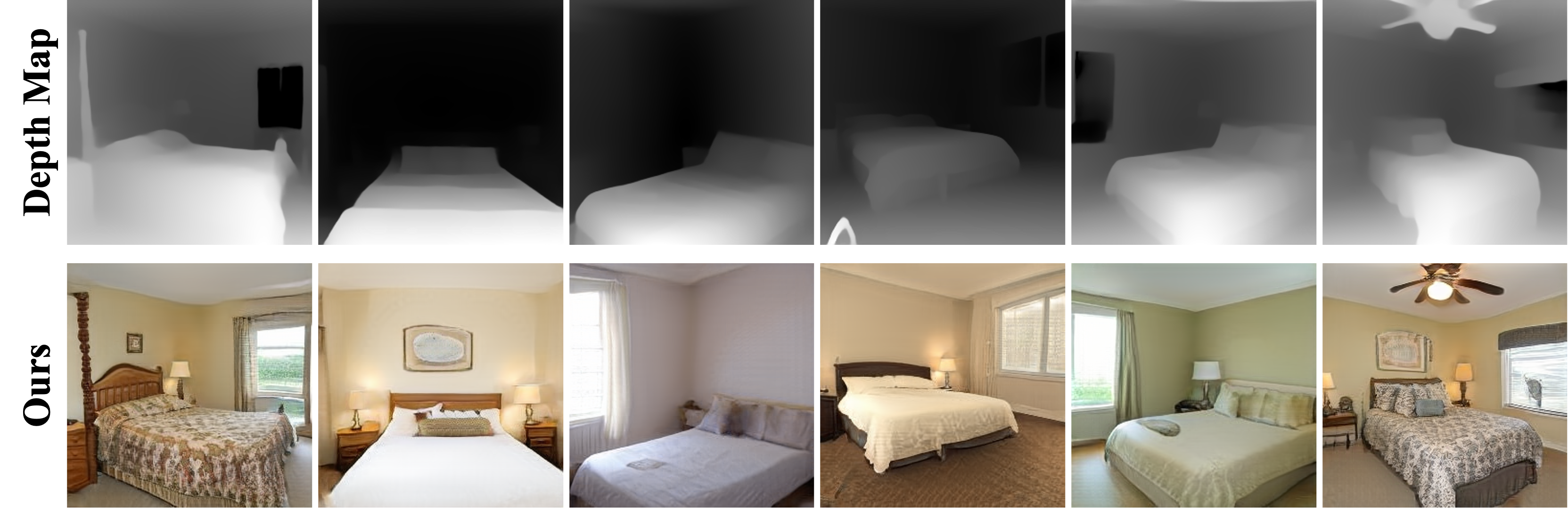

Semantic image synthesis (SIS) aims to generate realistic images that match given semantic masks. Although recent advances deliver high-quality results and precise spatial control, existing methods require a massive semantic segmentation dataset to train their models. Instead, we propose to employ a pre-trained unconditional generator and rearrange its feature maps according to proxy masks. The proxy masks are prepared from the feature maps of random samples in the generator by simple clustering. The feature rearranger learns to rearrange the original feature maps to match the shape of the proxy masks, which come either from the original sample itself or from random samples. We then introduce a semantic mapper that produces the proxy masks from various input conditions, including semantic masks. Our method is versatile across applications such as free-form spatial editing of real images, sketch-to-photo, and even scribble-to-photo. Experiments validate the advantages of our method on a range of datasets: human faces, animal faces, and buildings.

Feature maps carry spatial semantics, so clustering them yields a semantic mask, which we call a proxy mask. We use the proxy mask as the paired annotation for the generated sample.
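
As a rough illustration of how a proxy mask could be obtained, the sketch below runs plain k-means over the per-pixel feature vectors of a single feature map. The function name `proxy_mask_from_features`, the `(C, H, W)` layout, and the choice of k-means with random initialization are assumptions made for this example; the text above only states that "simple clustering" is used.

```python
import numpy as np

def proxy_mask_from_features(feats, k, n_iter=20, seed=0):
    """Cluster per-pixel feature vectors with plain k-means.

    feats: array of shape (C, H, W), standing in for one generator
           feature map (hypothetical layout, not taken from the source).
    Returns an (H, W) integer mask with labels in [0, k).
    """
    c, h, w = feats.shape
    x = feats.reshape(c, h * w).T            # (H*W, C): one vector per pixel
    rng = np.random.default_rng(seed)
    # Initialize centers from k distinct pixels (fancy indexing copies).
    centers = x[rng.choice(h * w, size=k, replace=False)]

    def assign(points, ctrs):
        # Squared Euclidean distance from every pixel to every center.
        d = ((points[:, None, :] - ctrs[None, :, :]) ** 2).sum(axis=-1)
        return d.argmin(axis=1)

    for _ in range(n_iter):
        labels = assign(x, centers)
        for j in range(k):
            members = x[labels == j]
            if len(members) > 0:             # keep the old center if empty
                centers[j] = members.mean(axis=0)

    return assign(x, centers).reshape(h, w)
```

The returned integer mask plays the role of the paired annotation: each label marks a spatial region whose features are similar, without requiring any human-labeled segmentation.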

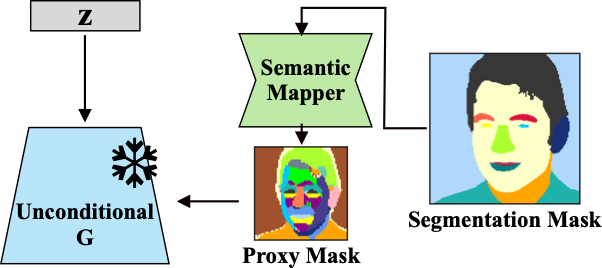

We introduce a novel method for semantic image synthesis: rearranging the feature maps of a pretrained unconditional generator according to given semantic masks. We break the problem into two sub-problems: rearranging the feature maps, and preparing supervision for the rearrangement.

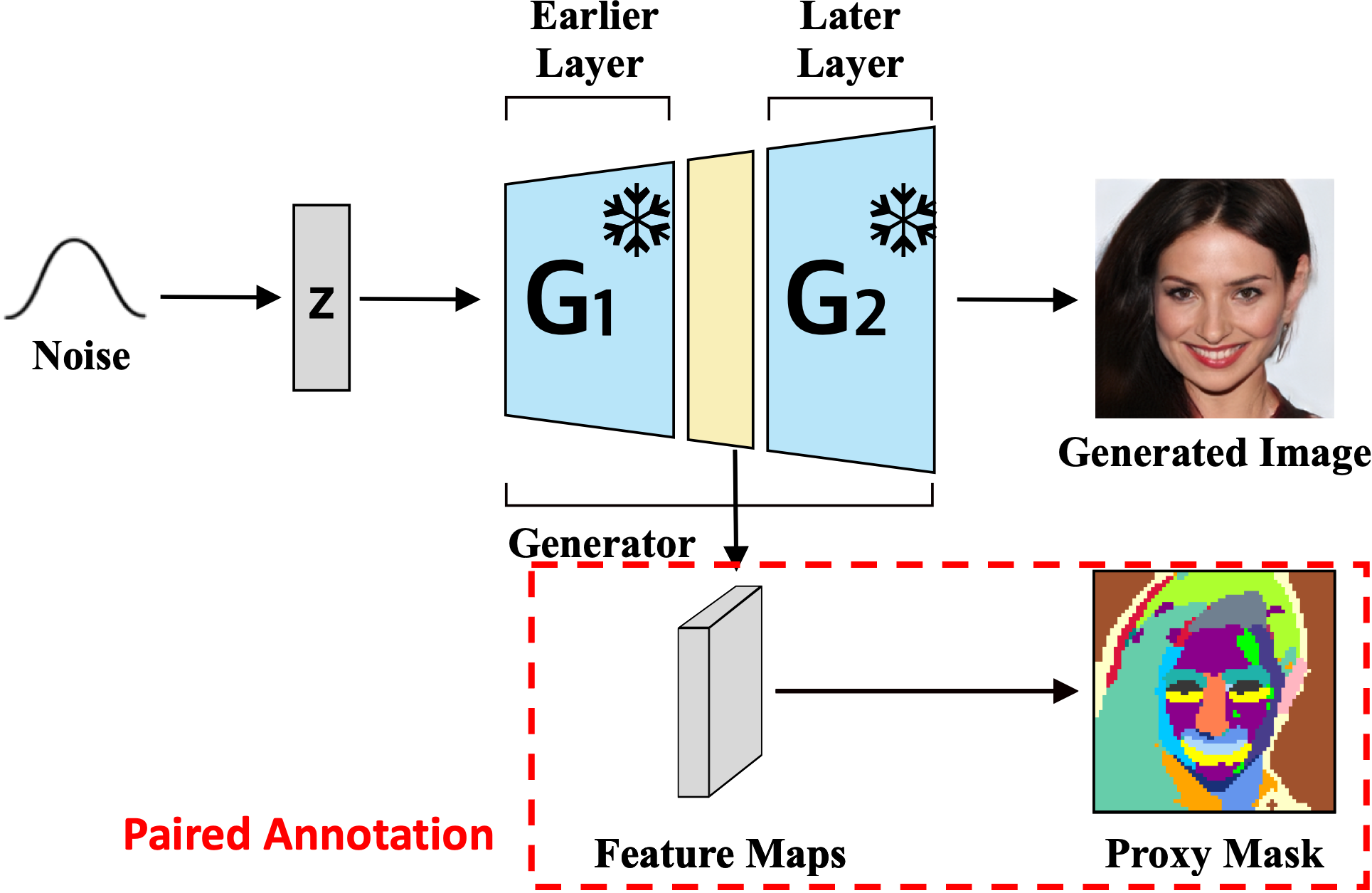

First, we design a rearranger that produces output feature maps according to input conditions by rearranging feature maps through an attention mechanism.
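
To make the idea of rearranging through attention concrete, here is a minimal sketch using scaled dot-product attention. It assumes that each target location issues a query (in practice derived from the input condition) and that the keys and values are the original feature vectors. The function name `rearrange_features`, the absence of learned projections, and the `(C, H, W)` layout are simplifications for illustration, not the architecture of the actual rearranger.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def rearrange_features(src_feats, queries):
    """Rearrange source features with scaled dot-product attention.

    src_feats: (C, H, W) original feature map (keys and values).
    queries:   (C, H, W) one query vector per target location
               (hypothetical stand-in for an encoded condition).
    Returns the rearranged (C, H, W) map and the (H*W, H*W) attention.
    """
    c, h, w = src_feats.shape
    kv = src_feats.reshape(c, h * w).T       # (N, C) keys / values
    q = queries.reshape(c, h * w).T          # (N, C) queries
    attn = softmax(q @ kv.T / np.sqrt(c), axis=-1)
    out = attn @ kv                          # each row mixes source pixels
    return out.T.reshape(c, h, w), attn
```

Because every output location is a weighted average of source locations, the output can only reuse content already present in the original feature maps, which is what makes the operation a rearrangement rather than a synthesis from scratch.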

Second, we prepare randomly generated samples, their feature maps, and spatial clusterings of those feature maps. The rearranger learns to rearrange the feature maps of one sample to match the semantic spatial arrangement of the other sample. However, a semantic gap may arise when the input is a segmentation mask. This gap stems from the discrepancy between the input segmentation mask and the proxy mask, which is the arrangement of feature maps obtained from the generator through simple clustering. We close this gap by training a semantic mapper that converts an input mask into a proxy mask.

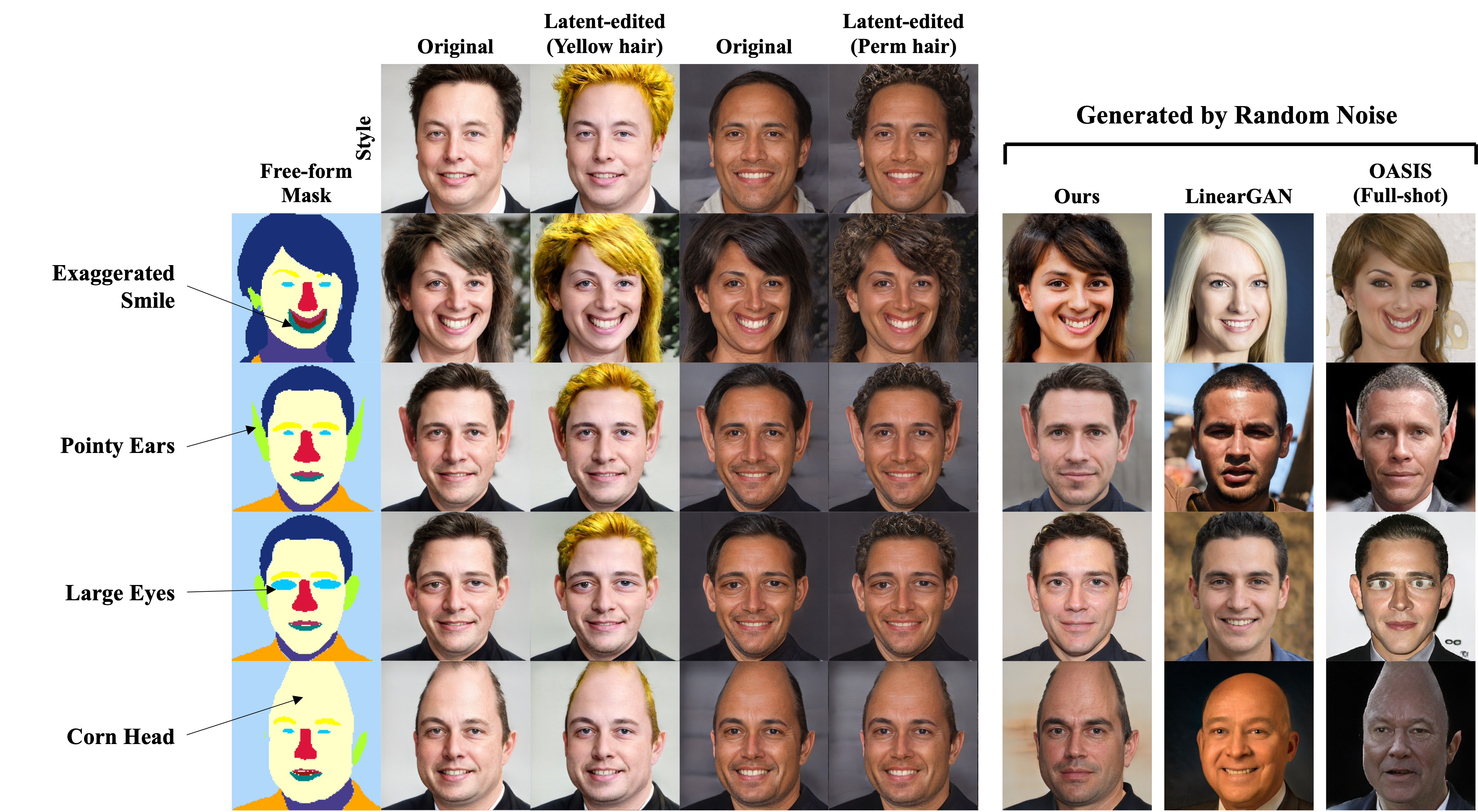

We demonstrate the flexibility of our method in free-form image manipulation. It handles masks that differ significantly from typical training distributions, such as large pointy ears and corn head.

To show the versatility of our method, we present images generated under various conditions: user sketches, HED edge maps, and depth maps.

Beyond the specified conditions, our model can produce an output image whose structure is similar to that of a provided sample. This is done by inverting the provided sample and obtaining its proxy mask.